A Faster UIWebView Communication Mechanism

tl;dr: Use location.hash (instead of location.href or the src attribute of iframes) to do fast synthetic navigations that trigger a UIWebViewDelegate's webView:shouldStartLoadWithRequest:navigationType: method.

As previously mentioned, Quip's editor on iOS is implemented using a UIWebView that wraps a contentEditable area. The editor needs to communicate with the containing native layer for both big (document data in and out) and small (update toolbar state, accept or dismiss auto-corrections, etc.) things. While UIWebView provides an officially sanctioned mechanism for getting data into it (stringByEvaluatingJavaScriptFromString¹), there is no counterpart for getting data out. The most commonly used workaround is to have the JavaScript code trigger a navigation to a synthetic URL that encodes the data, intercept it via the webView:shouldStartLoadWithRequest:navigationType: delegate method and then extract the data out of the request's URL².
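Below is a minimal sketch of the JavaScript side of that workaround. The bridge:// scheme, the command/payload layout, and the function names are hypothetical choices for illustration, not Quip's actual protocol. parseBridgeUrl shows in JavaScript the decoding that, in an app, the native webView:shouldStartLoadWithRequest:navigationType: implementation would perform on the request's URL (typically before returning NO so that the synthetic load is cancelled).

```javascript
// Hypothetical scheme name; the native delegate would recognize it.
const BRIDGE_SCHEME = "bridge";

// Encode a command name and a JSON-serializable payload into a synthetic URL.
function buildBridgeUrl(command, payload) {
  return BRIDGE_SCHEME + "://" + encodeURIComponent(command) +
      "?" + encodeURIComponent(JSON.stringify(payload));
}

// Inverse of buildBridgeUrl, written in JavaScript only to show the round
// trip; in the app this decoding would happen in Objective-C.
function parseBridgeUrl(url) {
  const prefix = BRIDGE_SCHEME + "://";
  if (!url.startsWith(prefix)) return null; // not a bridge message
  const rest = url.slice(prefix.length);
  const q = rest.indexOf("?"); // encodeURIComponent escapes any "?" in the data
  if (q === -1) return null;
  return {
    command: decodeURIComponent(rest.slice(0, q)),
    payload: JSON.parse(decodeURIComponent(rest.slice(q + 1))),
  };
}

// Trigger the navigation the "classic" way. `win` is normally `window`; it is
// a parameter here so the function can be exercised outside a browser.
function sendViaHref(win, command, payload) {
  win.location.href = buildBridgeUrl(command, payload);
}
```

In a page this would be called as sendViaHref(window, "updateToolbar", { bold: true }), where updateToolbar is likewise a made-up command name.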

The workaround did allow us to communicate back to the native Objective-C code, but its latency seemed higher than I expected, especially on lower-end devices like the iPhone 4 (where it was several milliseconds). I decided to poke around and see what happened between the synthetic navigation being triggered and the delegate method being invoked. Getting a stack from the native side didn't prove helpful, since the delegate method was invoked via NSInvocation with not much else on the stack beyond the event loop scaffolding. However, that did provide a hint that the delegate method was being invoked after some spins of the event loop, which perhaps explained the delays.

On the JavaScript side, we were triggering the navigation by setting the location.href property. Starting at the WebKit implementation of that setter, we end up in DOMWindow::setLocation, which in turn uses NavigationScheduler::scheduleLocationChange³. As the name “scheduler” suggests, this class requests navigations to happen sometime in the future. In the case of explicit location changes, a delay of 0 is used. However, 0 doesn't mean “immediately”: a timer is still installed, and WebKit waits for it to fire. That involves at least one spin of the event loop, which may take a few milliseconds on a low-end device.
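To make the difference concrete, here is a toy model (not WebKit code, and far simpler than the real NavigationScheduler) contrasting a navigation scheduled on a 0-delay timer with one handled immediately. The event loop is simulated explicitly so that the ordering is deterministic: a scheduled navigation is not delivered until the loop spins, while an immediate one is delivered during the call that requests it. The model also reflects a consequence the post notes further on: a newer scheduled location change replaces one that is still pending.

```javascript
// Toy event loop: timers registered during one turn run on the next spin().
class ToyEventLoop {
  constructor() { this.timers = []; }
  setTimer(callback) { this.timers.push(callback); } // "delay 0"
  spin() {
    const due = this.timers;
    this.timers = [];
    due.forEach((cb) => cb());
  }
}

// Toy scheduler; onNavigate stands in for the native delegate callback.
class ToyNavigationScheduler {
  constructor(loop, onNavigate) {
    this.loop = loop;
    this.onNavigate = onNavigate;
    this.pendingUrl = null;
  }
  // Models a location.href assignment: remember the URL and arm a timer.
  scheduleLocationChange(url) {
    const alreadyArmed = this.pendingUrl !== null;
    this.pendingUrl = url; // a newer request replaces the pending one
    if (!alreadyArmed) {
      this.loop.setTimer(() => {
        const target = this.pendingUrl;
        this.pendingUrl = null;
        this.onNavigate(target);
      });
    }
  }
  // Models a navigation that is handled right away, without a timer.
  navigateImmediately(url) { this.onNavigate(url); }
}
```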

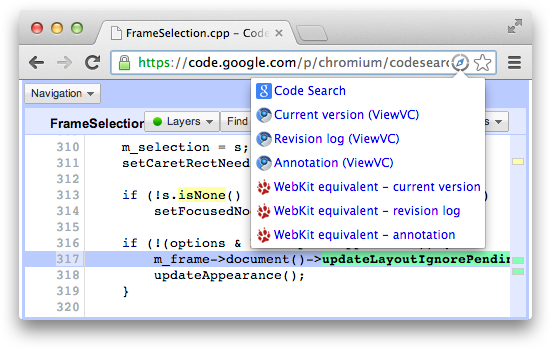

I decided to look through the WebKit source to see if there were other JavaScript-accessible ways to trigger navigations that didn't go through NavigationScheduler. Some searching turned up the HTMLAnchorElement::handleClick method, which invoked FrameLoader::urlSelected directly (FrameLoader being the main entrypoint into WebKit's URL loading). In turn, the anchor handleClick method can be directly invoked from the JavaScript side by dispatching a click event (most easily done via the click() method). Thus it seemed like an alternate approach would be to create a dummy link node, set its href attribute to the synthetic URL, and simulate a click on it. More work than just setting the location.href property, but perhaps it would be faster since it would avoid spinning the event loop.
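A sketch of the synthetic-click approach follows. createAnchorBridge is a hypothetical helper name; `doc` is normally `document` and is a parameter only so the function can be tested with a stub. The anchor is created once and deliberately never inserted into the document, in line with the caveats listed later in the post (no layout invalidation when its href changes, no global click handlers fired).

```javascript
// Returns a send(url) function that navigates via a detached, reused anchor.
function createAnchorBridge(doc) {
  const anchor = doc.createElement("a"); // never appended to the document
  return function send(url) {
    anchor.href = url; // a synthetic URL, e.g. bridge://... (hypothetical scheme)
    anchor.click();    // dispatches a click; the anchor's default action
                       // navigates to href, which reaches the native delegate
  };
}
```

In a page this would be used as const send = createAnchorBridge(document); followed by send("bridge://someCommand"), with the URL format again being an assumption.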

Once I got that all hooked up, I could indeed see that everything was now running slightly faster, and synchronously too — here's a stack trace showing native-to-JS-to-native communication:

    #0: TestBed`-[BenchmarkViewController endIteration:]
    #1: TestBed`-[BenchmarkViewController webView:shouldStartLoadWithRequest:navigationType:]
    #2: UIKit`-[UIWebView webView:decidePolicyForNavigationAction:request:frame:decisionListener:]
    ...
    #17: WebCore`WebCore::FrameLoader::urlSelected(...)
    ...
    #23: WebCore`WebCore::jsHTMLElementPrototypeFunctionClick(...)
    #24: 0x15e8990f
    #25: JavaScriptCore`JSC::Interpreter::execute(...)
    ...
    #35: UIKit`-[UIWebView stringByEvaluatingJavaScriptFromString:]
    #36: TestBed`-[BenchmarkViewController startIteration]
    ...

More recently, I took a more systematic approach to evaluating this and other communication mechanisms. I created a simple test bed and gathered timings (in milliseconds) from a few devices, all running iOS 7:

| Method/Device | iPhone 4 (A4) | iPad Mini (A5) | iPhone 5 (A6) | iPhone 5s (A7) | Simulator (2.7 GHz Core i7) |
|---|---|---|---|---|---|
| location.href | 3.88 | 2.01 | 1.31 | 0.84 | 0.22 |
| <a> click | 1.50 | 0.87 | 0.58 | 0.40 | 0.13 |
| location.hash | 1.42 | 0.86 | 0.55 | 0.39 | 0.13 |
| frame.src | 3.52 | 1.86 | 1.16 | 0.87 | 0.29 |
| XHR sync | 8.66 | 3.25 | 2.19 | 1.34 | 0.45 |
| XHR async | 6.38 | 2.32 | 1.62 | 1.00 | 0.33 |
| document.cookie | 2.89 | 1.22 | 0.78 | 0.55 | 0.16 |
| JavaScriptCore | 0.33 | 0.18 | 0.14 | 0.09 | 0.03 |

The mechanisms are as follows:

- location.href: Setting the location.href property to a synthetic URL.
- <a> click: Simulating a click on an anchor node that has the synthetic URL set as its href attribute.
- location.hash: Setting the location.hash property to the data encoded as a fragment. It's faster than replacing the whole URL because same-page navigations are executed immediately instead of being scheduled (thanks to Will Kiefer for telling me about this). In practice this turns out to be even faster than <a> click, since the latter fires a click event, which results in a hit target being computed, which forces layout.
- frame.src: Setting the src property of a newly-created iframe. Based on examining the chrome.js file inside the Chrome for iOS .ipa, this is the approach that it uses to communicate: it creates an iframe with a chromeInvoke://... src, appends it to the body, and immediately removes it. This approach also triggers the navigation synchronously, but since it modifies the DOM, the layout is invalidated, so repeated invocations end up being slower.
- XHR sync/async: XMLHttpRequests that load a synthetic URL, either synchronously or asynchronously; on the native side, the load is intercepted via an NSURLProtocol subclass. This is the approach that Apache Cordova/PhoneGap prefers: it sends an XMLHttpRequest that is intercepted via CDVURLProtocol. This also ends up being slower because the NSURLProtocol methods are invoked on a separate thread, and the code has to jump back to the main thread to invoke the endpoint methods.
- document.cookie: Having the JavaScript side set a cookie and then being notified of that change via NSHTTPCookieManagerCookiesChangedNotification. I'm not aware of anyone using this approach, but the idea came to me when I looked for other properties (besides the URL) that change in a web view and could be observed on the native side. Unfortunately the notification is triggered asynchronously, which explains why it's still not as fast as the simulated click.
- JavaScriptCore: Direct communication via a JSContext using Nick Hodapp's mechanism. Note that this approach involves adding a category on NSObject to implement a WebFrameLoadDelegate protocol method that is not present on iOS. Though the approach degrades gracefully (if Apple ever provides an implementation for that method, theirs will be used), it still relies on enough internals and "private" APIs that it doesn't seem like a good idea to ship an app that uses it. This result is only presented to show the performance possibilities if a more direct mechanism were officially exposed.
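Here is a sketch of the location.hash mechanism from the list above. HASH_PREFIX, the sequence counter, and the colon-separated layout are assumptions of this sketch rather than Quip's format: the prefix lets the native delegate tell bridge messages apart from ordinary fragment links, and the counter keeps two consecutive identical messages from producing an unchanged hash, since assigning location.hash its current value is generally a no-op rather than a navigation.

```javascript
// Hypothetical prefix marking a fragment as a bridge message.
const HASH_PREFIX = "bridge-";
let hashSequence = 0;

// Build "bridge-<seq>:<command>:<payload>". encodeURIComponent escapes ":",
// so the separators cannot appear inside the encoded fields.
function encodeHashMessage(command, payload) {
  hashSequence += 1; // guarantees a different fragment on every call
  return HASH_PREFIX + hashSequence + ":" + encodeURIComponent(command) +
      ":" + encodeURIComponent(JSON.stringify(payload));
}

// `win` is normally `window`. Changing only the fragment is a same-document
// navigation, which WebKit performs immediately instead of scheduling it.
function sendViaHash(win, command, payload) {
  win.location.hash = encodeHashMessage(command, payload);
}
```

On the native side, the delegate would then look for HASH_PREFIX in the fragment of the request's URL and decode the remaining fields.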

My tests show the location.hash and synthetic click approaches consistently beating location.href (and all other mechanisms except the unofficial JavaScriptCore one), and on low-end devices they're more than twice as fast. One might think that a few milliseconds would not matter, but when responding to user input and doing several such calls, the savings can add up⁴.
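Working the iPhone 4 column of the table through gives the speedups and, assuming a purely hypothetical five bridge calls in response to one user input, the time saved:

$$
\frac{t_{\text{href}}}{t_{\text{hash}}} = \frac{3.88\ \text{ms}}{1.42\ \text{ms}} \approx 2.73,
\qquad
\frac{t_{\text{href}}}{t_{\text{click}}} = \frac{3.88\ \text{ms}}{1.50\ \text{ms}} \approx 2.59
$$

$$
5 \times (t_{\text{href}} - t_{\text{hash}}) = 5 \times (3.88 - 1.42)\ \text{ms} = 5 \times 2.46\ \text{ms} = 12.3\ \text{ms}
$$

For comparison, a single frame at 60 Hz lasts about 16.7 ms.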

Quip has been using the location.hash mechanism for more than a year, and so far with no ill effects. There are a few things to keep in mind, though:

- Repeatedly setting location.href in the same spin of the event loop results in only the final navigation happening. Since location.hash changes (and synthetic clicks) are processed immediately, they will all result in the delegate being invoked. This is generally desirable, but you may have to check for reentrancy, since stringByEvaluatingJavaScriptFromString is synchronous too.
- For synthetic clicks, changing the href attribute of the anchor node would normally invalidate the layout of the page. However, you can avoid this by not adding the node to the document when creating it.
- Keeping the anchor node out of the document also avoids triggering any global click event handlers that you have registered.
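Regarding the reentrancy caveat: because a hash (or click) send reaches the delegate synchronously, native code handling one message can call back into JavaScript via stringByEvaluatingJavaScriptFromString, and that JavaScript may send another message while the first is still being handled. One possible JavaScript-side safeguard, offered here as an assumption and not as what Quip does, is to queue messages posted while a send is already on the stack and deliver them once it returns. createSerializedBridge and `transport` are hypothetical names; `transport` would be any synchronous sender, such as a function that sets location.hash.

```javascript
// Wraps a synchronous transport so nested posts are queued and delivered
// after the outer send returns, one at a time and in order.
function createSerializedBridge(transport) {
  const queue = [];
  let sending = false;
  return function post(message) {
    queue.push(message);
    if (sending) {
      return; // the outer post() call's loop below will deliver this message
    }
    sending = true;
    try {
      while (queue.length > 0) {
        transport(queue.shift());
      }
    } finally {
      sending = false; // reset even if transport throws; leftovers drain on the next post
    }
  };
}
```

The trade-off is that a nested message is delivered after the outer one finishes rather than in the middle of it, which keeps the native handler from being re-entered.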

I was very excited when iOS 7 shipped public access to the JavaScriptCore framework. My hope was that this would finally allow something like Android's @JavaScriptInterface mechanism, which allows easy exposure of arbitrary native methods to JavaScript. However, JavaScriptCore is only usable in standalone JavaScript VMs; it cannot (officially⁵) be pointed at a UIWebView's JavaScript context. Thus it looks like we'll be stuck with hacks such as this one for another year.

Update on 1/12/2014: Thanks to a pull request from Felix Raab, the post has been updated to show the performance of direct communication via JavaScriptCore.

1. I used to think that this method name was preposterously long. Now that I've been exposed to Objective-C (and Apple's style) more, I find it perfectly reasonable (and the names that I choose for my own code have gotten longer too). Relatedly, I do like Apple's consistency for will* vs. did* in delegate method names, and I've started to adopt that for JavaScript too.
2. There are also more exotic approaches possible; for example, LinkedIn experimented with WebSockets and is (was?) using a local HTTP server.
3. Somewhat coincidentally, NavigationScheduler is where I made one of my first WebKit contributions, though back then it was known as RedirectScheduler.
4. As Brad Fitzpatrick pointed out on Google+, a millisecond is still a long time for what is effectively two function calls. Most of the overhead appears to come from evaluating the JS snippet passed to stringByEvaluatingJavaScriptFromString, followed by constructing the HTTP request that is passed to webView:shouldStartLoadWithRequest:navigationType:.
5. In addition to implementing a WebFrameLoadDelegate method, another way of getting at a JSContext is to use key-value coding (KVC) to look up a private property. The latter is in some ways more direct and straightforward, but it seems even more likely to run afoul of App Store review guidelines.